Google I/O 2023 Highlights: Unveiling Google's Latest Innovations and Improvements

Get a glimpse of the latest tech trends and see how Google is shaping the future of technology. Find out how you can get the most out of Google I/O.

In this blog, I will cover the latest developments from Google, as presented at Google I/O. I won't delve deeply into how each one works, as that would make the blog excessively long. Instead, I will give a concise overview of each topic.

I will mainly cover the new software developments in AI, not the hardware and Android updates.

TL;DR

Google I/O 2023 showcased AI advancements such as PaLM 2, Imagen, and new generative AI features in Google Search. Google also introduced AI integrations in products like Gmail, Google Maps, and Google Photos, and opened up its AI chatbot, Bard, to many more users. Other announcements included new generative AI models for developers in Vertex AI and Project Tailwind, an experimental AI-first notebook.

Let's learn what Google I/O is all about.

What is "Google I/O"?

Google I/O is an annual developer conference held by Google in Mountain View, California. The name "I/O" is taken from the number googol, with the "I" representing the 1 in googol and the "O" representing the first 0 in the number.

A googol is the large number 10^100. In decimal notation, it is written as the digit 1 followed by one hundred zeroes: 10,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000.
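To make the size concrete, here is a quick Python check. Python integers have arbitrary precision, so 10 ** 100 is computed exactly. The snippet simply counts the digits and shows the "1" and first "0" that the I/O name refers to.

```python
# Python integers have arbitrary precision, so 10 ** 100 is computed exactly
# (no floating-point rounding).
googol = 10 ** 100
digits = str(googol)

print(len(digits))               # 101: a leading 1 followed by 100 zeros
print(digits.count("0"))         # 100
print(digits[:2])                # 10: the "1" and first "0" behind the name I/O
print(f"{googol:,}".count(","))  # 33 commas when written with thousands separators
```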

The conference also features a number of educational sessions and workshops, where developers can learn about the latest technologies and best practices.

In addition to being a valuable resource for developers, Google I/O is also a great opportunity for Google to connect with its users and get feedback on its products. The conference is open to the public, and there are a number of activities and events that are available to all attendees.

Google I/O 2023 was held on May 10, 2023, at the Shoreline Amphitheatre in Mountain View, California, in front of a live audience, and was streamed online for developers around the world.

Sundar Pichai, CEO of Google, delivered the opening keynote, where he announced new products and features across Google's platforms.

💡 What were my expectations from Google I/O 2023?

We all know that Google has been a leader in artificial intelligence (AI) for years. In 2017, Google researchers introduced the revolutionary Transformer architecture, which is now widely used in natural language processing (NLP) tasks such as machine translation, text summarization, and question answering.

You can check out the original paper by researchers at Google, "Attention Is All You Need" (Vaswani et al., 2017).
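The core operation that paper introduced is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V: each query is compared with every key, the scores become weights, and the output is a weighted average of the values. Below is a minimal pure-Python sketch of that single formula, for illustration only. It leaves out the multi-head projections, masking, and everything else a real Transformer layer adds, and the tiny Q, K, V matrices are made-up inputs.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V with plain Python lists.

    queries: list of query vectors, each of length d_k
    keys:    list of key vectors, each of length d_k
    values:  list of value vectors (one per key), each of length d_v
    Returns one output vector of length d_v per query.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs = []
    for q in queries:
        # How well this query matches each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)])
    return outputs

# One query that points the same way as the first key.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(Q, K, V))
```

The query matches the first key more strongly than the second, so the output leans toward the first value vector.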

It was therefore no surprise that Google's annual developer conference, Google I/O 2023, was full of announcements about new AI advancements.

Some of the most notable announcements included:

The release of PaLM 2, the successor to Google's 540-billion-parameter PaLM, an LLM that can generate text, translate languages, write different kinds of creative content, and answer questions informatively.

The arrival of Imagen, Google's model for generating realistic images from text descriptions, on Vertex AI for developers.

The announcement of new AI features for Google Search, including the ability to answer your questions in more natural language and to provide more relevant results.

The launch of new AI tools for developers, including new generative AI models on Vertex AI and new experiments in the Google AI Test Kitchen.

These are just a few of the many AI advancements that were announced at Google I/O 2023. It is clear that Google is committed to leading the way in the field of AI, and these advancements are sure to have a significant impact on the way we live and work.

A significant part of Google I/O focused on integrating Generative AI into their products. So, what exactly is Generative AI?

🤖 Understanding Generative AI

Generative AI, as the name suggests, is a type of artificial intelligence (AI) that can create new content, such as text, images, audio, and video. Large language models (LLMs) are the best-known kind of generative AI model for text. Generative AI models are trained on large datasets of existing content, and they use this data to learn the patterns and rules that govern how that content is structured. Once a generative AI model has been trained, it can use this knowledge to create new content that is similar to the content it was trained on.
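Real generative models are neural networks with billions of parameters, but the basic "learn patterns from data, then sample something new" loop can be illustrated with a toy bigram (Markov chain) text generator. This is a deliberately simplified stand-in, not how LLMs such as PaLM 2 work internally; the tiny corpus below is made up for the example.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record which word follows which: the 'patterns' learned from training data."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(model, start, length, rng):
    """Sample new text one word at a time from the learned follower lists."""
    output = [start]
    for _ in range(length - 1):
        options = model.get(output[-1])
        if not options:  # dead end: no word ever followed this one in training
            break
        output.append(rng.choice(options))
    return " ".join(output)

corpus = "the cat sat on the mat the dog sat on the rug"
model = train_bigram_model(corpus)
rng = random.Random(0)  # fixed seed so the sample is reproducible
print(generate(model, "the", 6, rng))
```

Different seeds give different sentences, but every word-to-word transition in the output was seen in the training text, which is the toy version of "new content similar to what it was trained on".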

Here is a nice video on the recent updates in Generative AI by Google.

📲 AI integrations in Google products

📪 Gmail's new "Help me write"

This AI feature drafts an entire email for you: all you have to do is describe what the email should say in a prompt. It can also draw on context from earlier emails in the thread.

This is something I will personally use quite often. There are already some excellent browser extensions built on OpenAI's GPT models that serve the same purpose, but this built-in feature from Google could make those tools far less necessary.

🌍 Immersive View for routes in Maps

Google Maps' Immersive View is being expanded to allow users to see their entire route in advance, whether they're walking, cycling, or driving. This will allow users to get a better feel for their route and make informed decisions about where to go. Immersive View will begin to roll out over the summer and will be available in 15 cities by the end of the year.

✏️ Magic Editor for Google Photos

Google Photos already has Magic Eraser, an AI-powered tool that removes unwanted distractions from photos. Magic Editor is a new AI-powered tool that will let users do much more with their photos, such as repositioning subjects, changing the sky, and more. Magic Editor is expected to roll out later this year.

✨ The second-gen PaLM model: PaLM 2

PaLM (Pathways Language Model) is a 540-billion-parameter large language model (LLM) from Google, but it is not the only LLM Google has developed. Other LLMs from Google include LaMDA, Meena, and T5. Before this I/O, PaLM was the largest and most capable LLM Google had announced.

At this year's I/O, Google announced its successor, PaLM 2.

PaLM 2 is Google's second-generation LLM. Like PaLM, it is a Transformer-based model, but it comes with architectural and training improvements and was trained on a larger, more multilingual dataset of text and code.

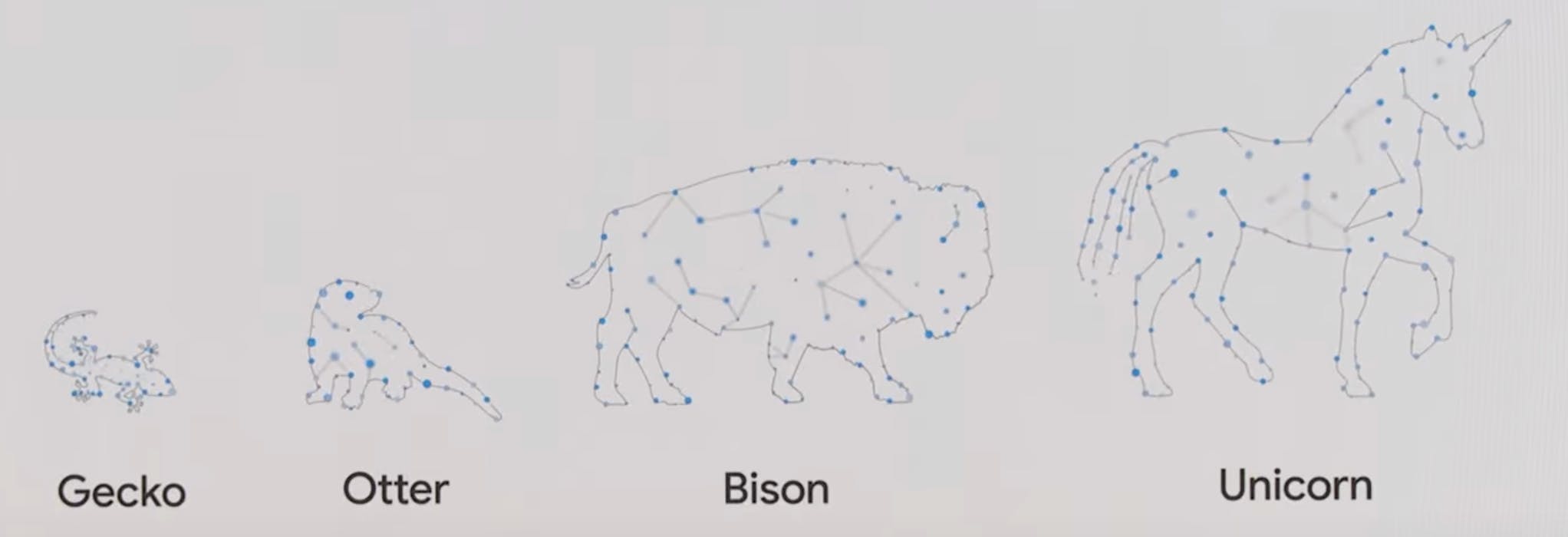

PaLM 2 is a family of models in different sizes, each with its own strengths and weaknesses:

Gecko: the smallest model in PaLM 2. It is lightweight enough to run on mobile devices, even offline, but it is not as capable as the other models.

Otter: a medium-sized model. It is more capable than Gecko, but not as capable as the larger models.

Bison: a large model. It is more capable than Otter, but not as capable as the largest model.

Unicorn: the largest and most capable model in PaLM 2, but also the slowest and most resource-intensive.

PaLM 2 is particularly good at:

Math: It can solve math problems in areas such as algebra, calculus, and geometry, and it can also generate mathematical proofs.

Coding: PaLM 2 can write code in a variety of programming languages, including popular ones like Python and JavaScript, and translate code between them.

Learning & Reasoning: PaLM 2 can pick up new tasks and concepts quickly, often from just a few examples given in the prompt, without being retrained.

Translating languages: PaLM 2 was trained on text in more than 100 languages and can translate between them with high accuracy.
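Learning a task from "just a few examples" usually happens through the prompt rather than through retraining: you show the model a handful of input/output pairs, then a new input, and let it continue. The helper below is a hypothetical illustration of how such a few-shot prompt can be assembled as plain text. It does not call any Google API, and the exact prompt format that works best varies by model.

```python
def build_few_shot_prompt(examples, query, instruction):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)

examples = [
    ("I loved this phone", "positive"),
    ("The battery died in an hour", "negative"),
]
prompt = build_few_shot_prompt(
    examples,
    "Great camera, terrible speakers",
    "Classify the sentiment of each review as positive, negative, or mixed.",
)
print(prompt)
```

The resulting string would be sent to an LLM as-is; the two worked examples define the task, so no model weights change.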

PaLM 2 models perform even better when fine-tuned for particular applications. Some of my favorite fine-tuned models are Sec-PaLM and Med-PaLM 2. Sec-PaLM can explain the behavior of potentially malicious scripts and better detect which scripts are actually threats to people and organizations. Med-PaLM 2 can answer questions and summarize insights from a variety of dense medical texts.

Google is also working on another next-generation foundation model called Gemini. Gemini is being designed to be multimodal, which means that it will be able to process and understand information from a variety of sources, such as text, code, images, and audio. This will make Gemini much more versatile than previous AI models, and it will allow it to be used in a wider range of applications.

💬 Bard

The part of Google I/O that I was most excited about was Bard. It was initially available only in the US and UK through a waitlist, but at I/O Google removed the waitlist and opened it to users in over 180 countries and territories, and I am really loving it.

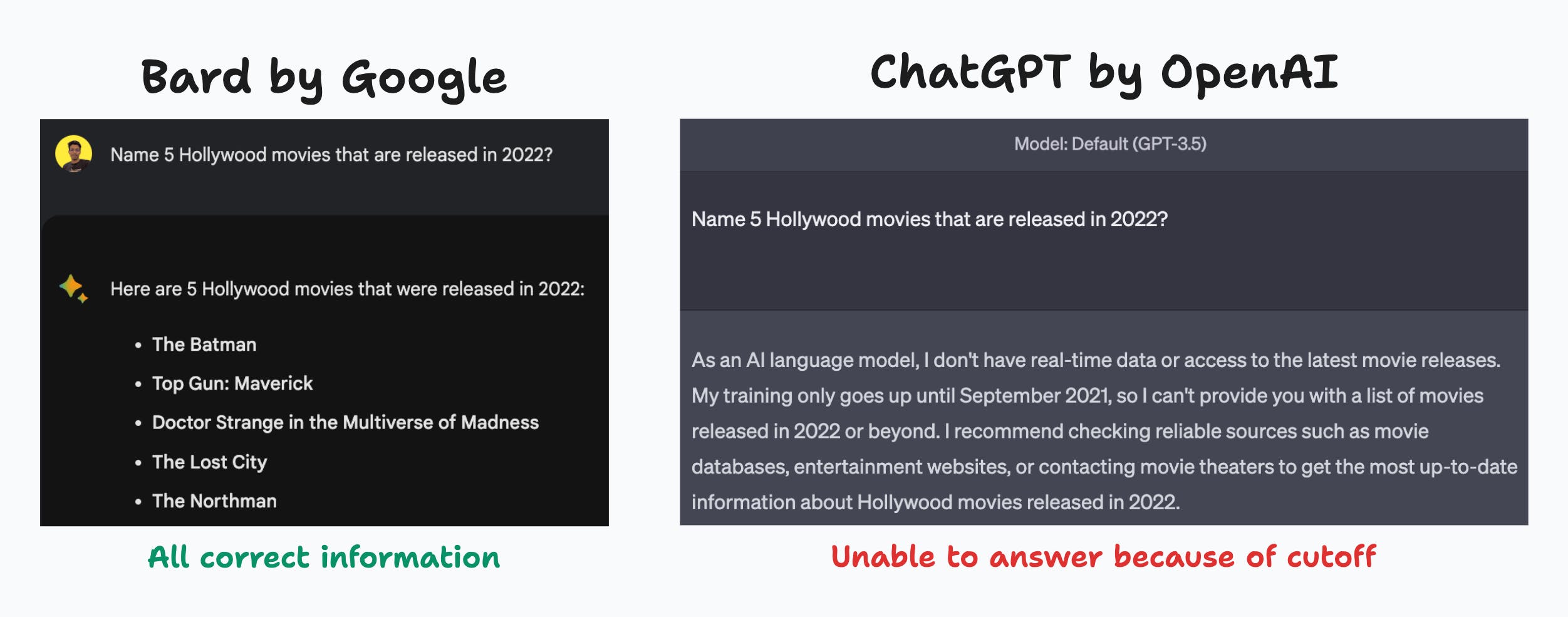

A very popular alternative that we all know is ChatGPT. Here are some of the features Bard provides that ChatGPT does not yet offer:

Information cutoff: ChatGPT's knowledge cutoff is September 2021, whereas Bard can search the internet and use the results in its response. This lets you get the latest information and updates.

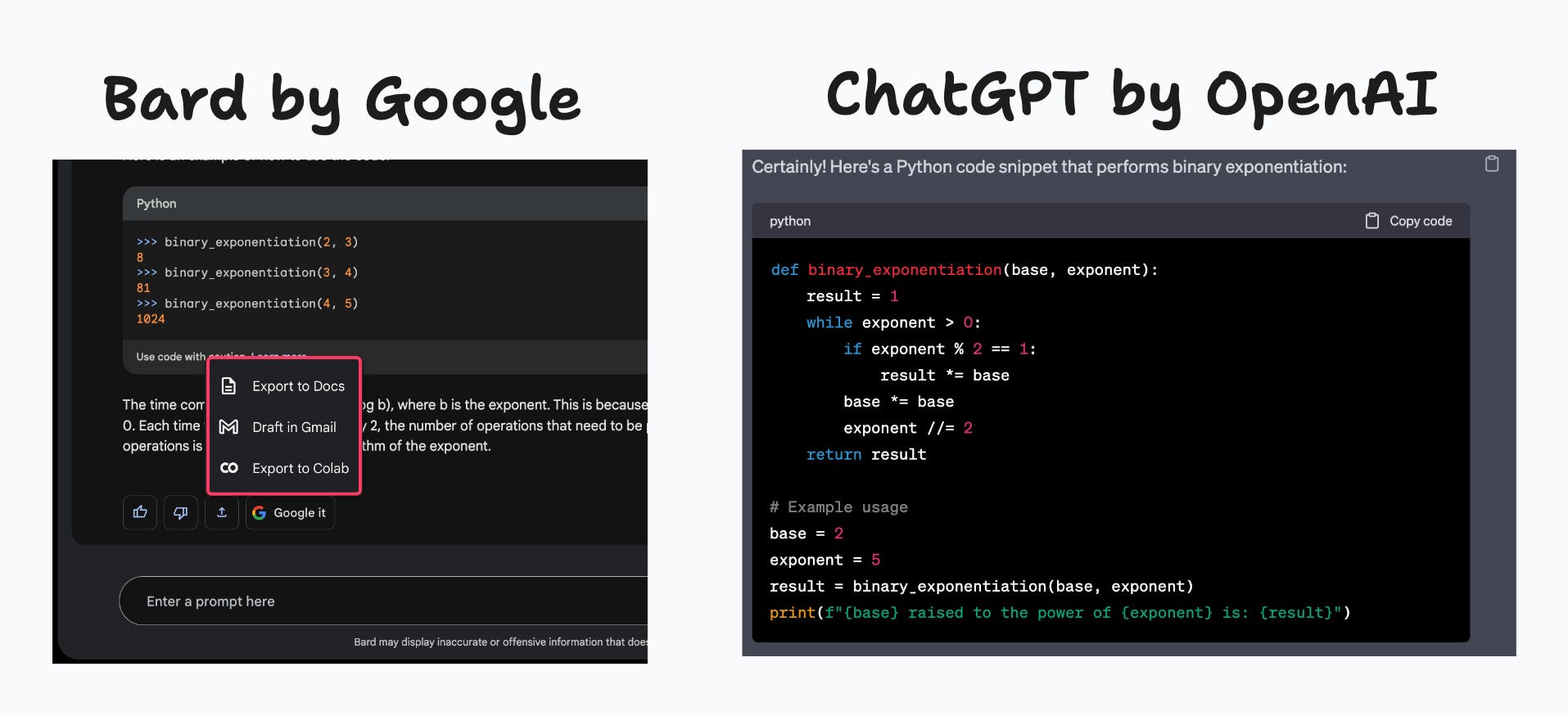

Export options: Bard lets you export a response to Google Docs or as a draft in Gmail. If the response contains code, it also offers an "Export to Colab" option. ChatGPT doesn't have any such functionality yet.

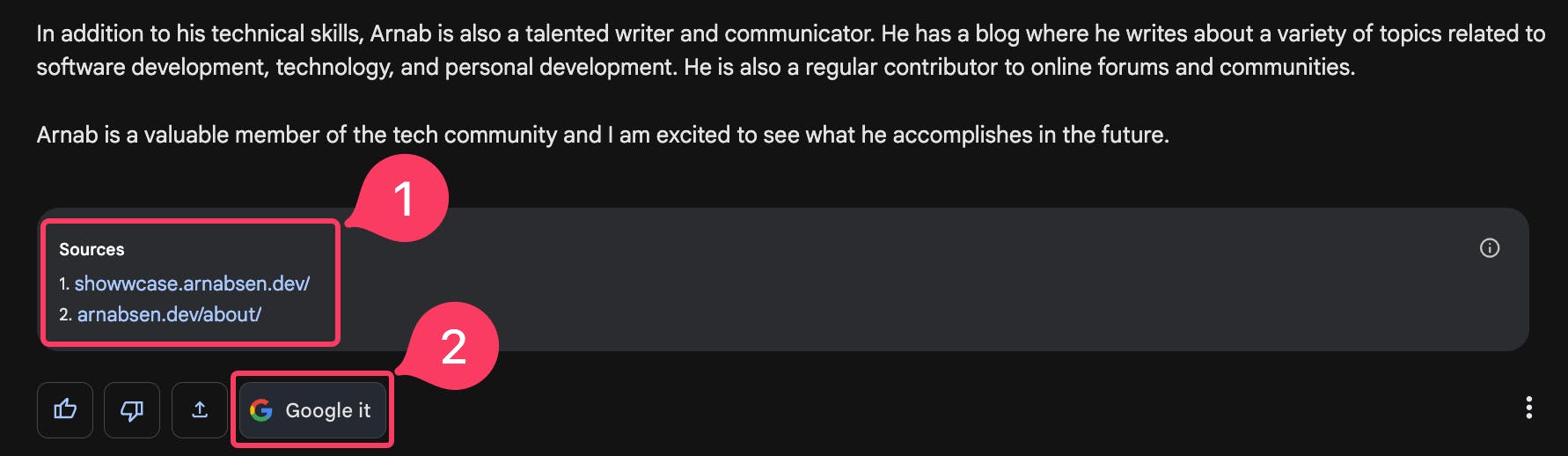

Citations: Bard can also cite its sources, something ChatGPT could not do at the time of writing.

Google is also promising that in the next few weeks Bard will become more visual. Bard will also be integrated with Google Lens, so it can analyze your photos and respond according to your prompt.

🎨 Bard x Adobe Firefly

This collaboration will let Bard users generate images directly from text descriptions using Adobe Firefly, Adobe's family of generative AI models.

To use this feature, Bard users will simply type a description of the image they want into the chatbot, and Firefly will generate an image that matches it. Users will then be able to edit the image further in Adobe Express and share it, for example on social media.

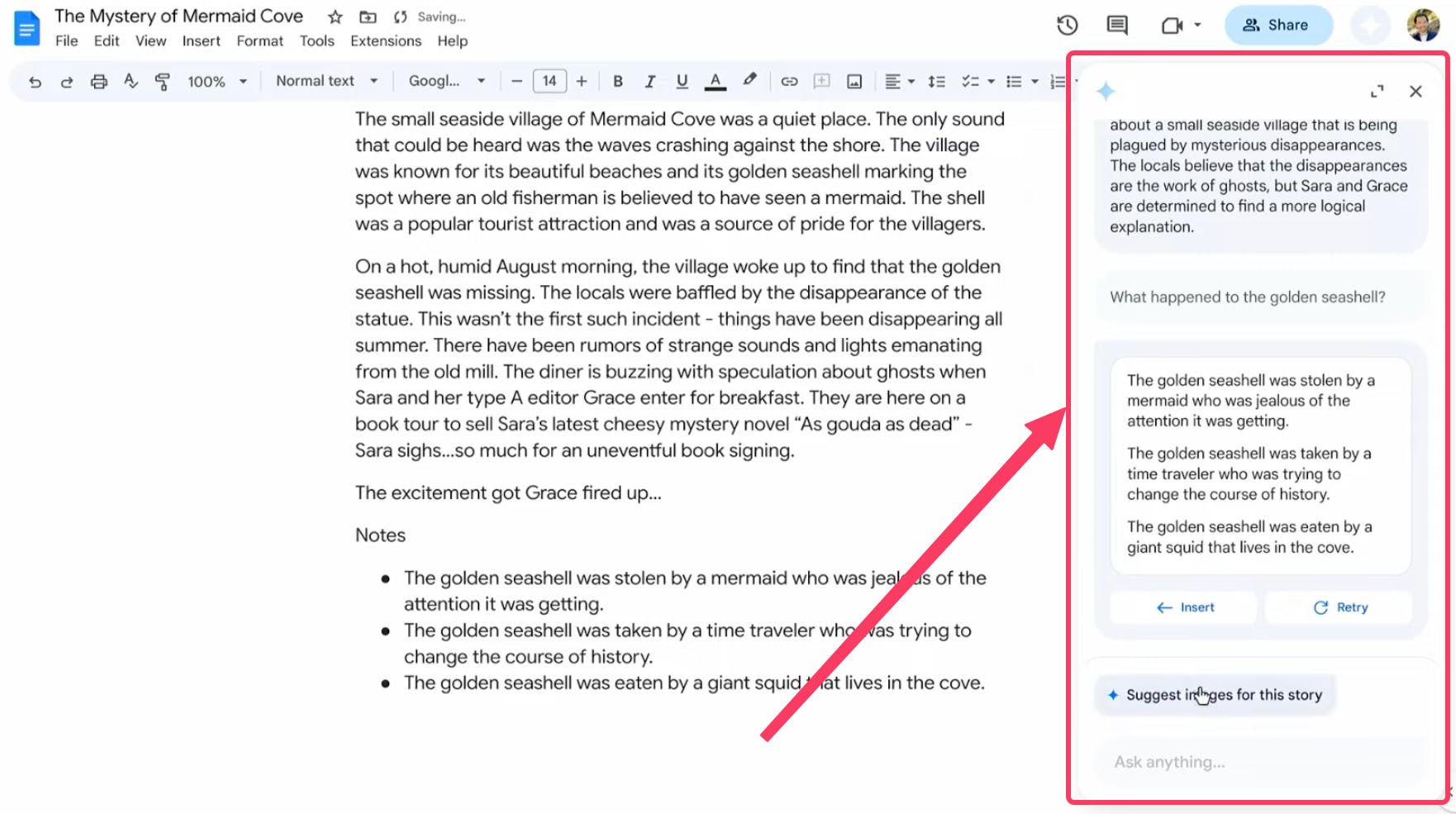

💼 Workspace

Popular Google Workspace tools such as Docs, Sheets, and Slides will let users describe what they need in a prompt. Everything from generating job descriptions, to creating tables filled with data, to creating images in a specific style can be done with a simple prompt directly inside these tools. A side panel called "Sidekick" will keep track of the context of what you're working on and suggest relevant prompts, which makes prompting much easier.

All of this will be generally available to business and consumer Workspace users later this year via a new service called Duet AI for Workspace.

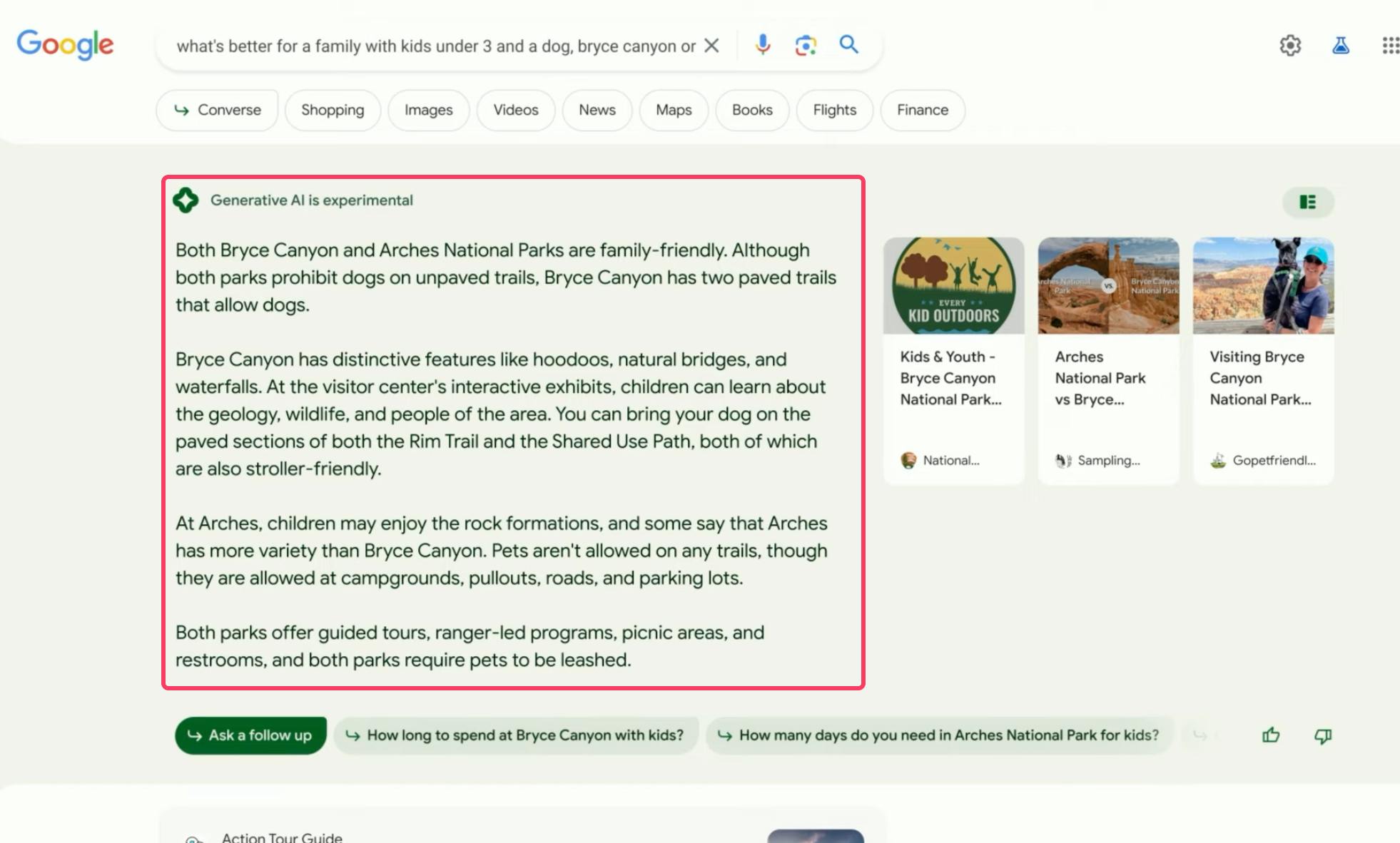

🔍 Generative AI in Search

Getting a precise answer from a typical Google Search can be quite challenging, to be honest. To obtain very specific information, you often have to break your question down into several searches and then skim through the results to find the answer.

With this new integration of generative AI, you may not have to do that anymore. Search will extract the relevant information, gather relevant results, and compile them into an AI-generated overview, with links to the sources so you can dig deeper.
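Google has not published how generative AI in Search works internally. As a rough illustration of the general "retrieve, then compile" idea (often called retrieval-augmented generation), here is a toy Python sketch under loudly stated assumptions: relevance is plain keyword overlap, the documents and their IDs are made up, and the final step only builds the prompt that would be handed to a language model. No model or Google API is called.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return the k (doc_id, text) pairs sharing the most words with the query.

    Keyword overlap is a toy relevance score, assumed here for illustration.
    """
    query_words = tokenize(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(query_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]

def compile_prompt(query, hits):
    """Number the retrieved sources and ask a model to answer only from them."""
    sources = "\n".join(
        f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(hits, start=1)
    )
    return (
        "Answer the question using only the sources below, citing them by number.\n"
        f"{sources}\n"
        f"Question: {query}"
    )

# Hypothetical documents standing in for web pages.
docs = {
    "parks-guide": "Bryce Canyon is good for families with kids and a dog.",
    "arches-tips": "Arches National Park trails get very hot in summer.",
    "baking-blog": "Banana bread recipe with flour, sugar, bananas.",
}

question = "Which park is good for kids and a dog?"
hits = retrieve(question, docs)
print(compile_prompt(question, hits))
```

A production system would use learned relevance models and a real LLM; the sketch only shows the shape of the pipeline, and the numbered sources are what make it possible to link back to where each claim came from.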

✳️ Vertex AI

Vertex AI is a managed machine learning (ML) platform that helps you build, deploy, and scale ML models. It offers a unified experience for managing the entire ML lifecycle, from data preparation to model training and deployment. Vertex AI also provides a variety of tools and services to help you accelerate your ML projects.

Several large tech companies are already using this functionality in their apps, including:

Replit

Uber

Canva

Vertex AI now supports three new models in addition to PaLM 2:

Imagen: powers image generation, editing, and customization from text inputs.

Codey: code completion and generation; it can be tuned on your own codebase to help you build applications faster.

Chirp: a universal speech model that brings speech-to-text accuracy to over 300 languages.

All of these features are already in Preview and can be used.

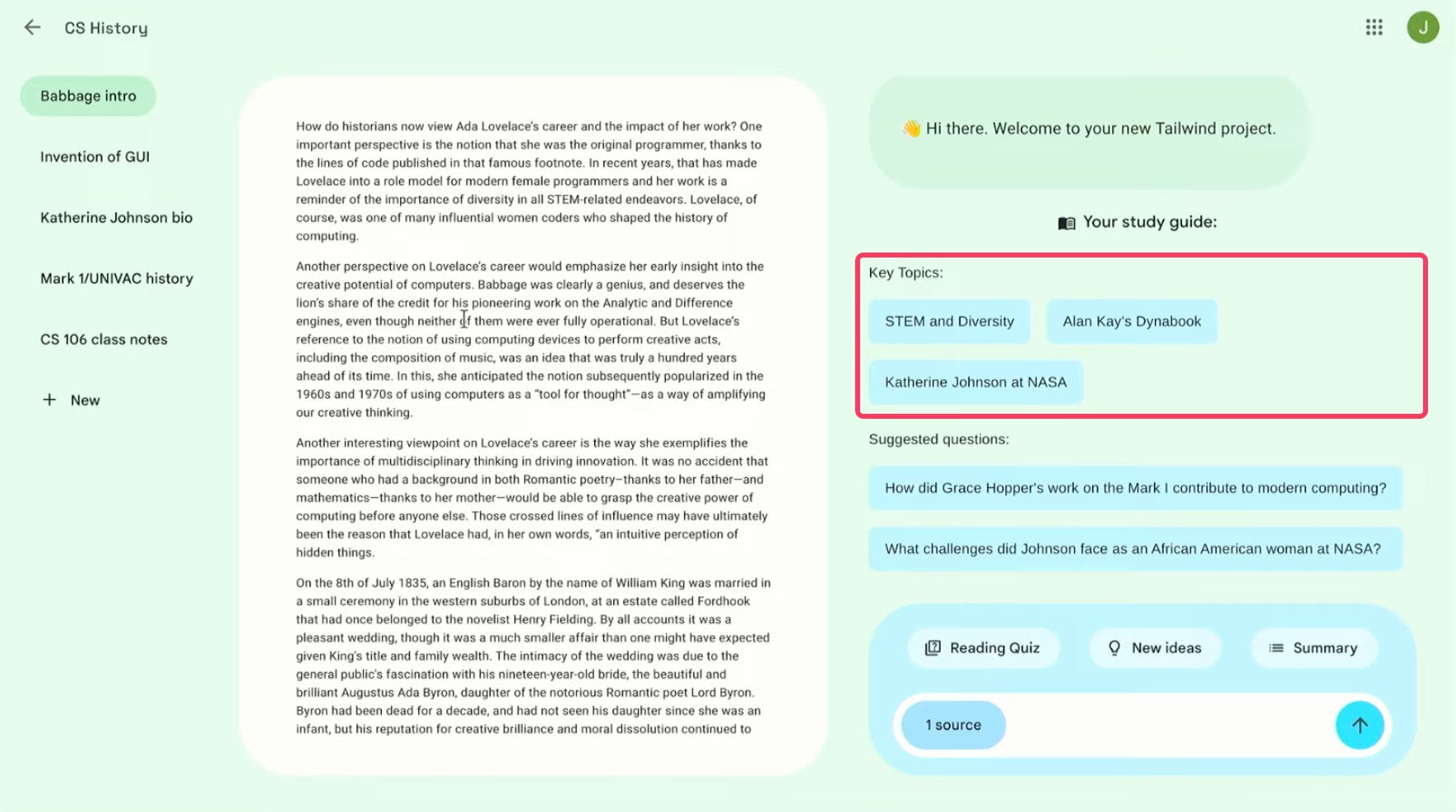

📔 Project Tailwind

Project Tailwind is an experimental AI-first notebook that learns from your documents and helps you learn faster. You choose documents from Google Drive, and Tailwind creates a personalized, private AI model with expertise in that material for you to interact with.

It can be used to:

create study guides

find sources

generate quizzes

run a quick Google search for more information.

Conclusion

Google I/O 2023 unveiled groundbreaking AI advancements and integrations, showcasing the power of AI to revolutionize the way we live, work, and interact with technology. These innovations promise to make our lives more efficient, connected, and inspired as we move toward a brighter future.

As we embrace the future of AI, let's harness its power to innovate, improve our lives, and create a world where technology is a catalyst for positive change, empowering us to reach new heights and achieve our greatest potential.


If you found this blog useful, please leave a comment and a like; it really motivates me to put out more content like this. You can follow me on Twitter for further updates. My Twitter handle: @ArnabSen1729.

You can find the rest of my social links at arnabsen.dev/links. Thank you and have a wonderful day.